By Carly Burton, Lecturer in the Center for Integrated Design

Artificial intelligence (AI) has been around as a field of study and practice for decades; however, we are now witnessing a surge of practical applications combined with access to robust data sets that are changing the ways we experience and interact with intelligent systems. This fall I taught a 5-week module on Design and Artificial Intelligence, with the objective of demonstrating how to use design as a tool for solving real-world problems as AI becomes increasingly integrated into our everyday lives.

Students were encouraged to answer questions such as: What might a machine’s capacity to make self-guided decisions mean for human agency? What might data-driven operations mean for personal privacy? And, most central to the course: if advancements in AI continue to challenge our norms of behavior and build entirely new experience paradigms, how can we best prepare for and navigate this future? What principles can we establish to guide the development and application of AI?

Understanding the Context

Before establishing a set of principles or building a solution, it is important to understand the greater context within which we are designing. So, we started the course by looking at technology industry transformations and how they relate to greater social and behavioral shifts. For instance, within the health care industry, AI is raising questions regarding medical responsibility: doctors can use AI to aid in clinical diagnosis, but there is concern about their lack of transparency into the system and its influence on standard procedures. If AI becomes more statistically accurate at diagnoses than doctors, and is used for diagnosis, who is at fault when it makes a mistake? Socially and behaviorally, however, patients are exhibiting well-established digital tracking routines to improve self-care, solidifying the quantified-self movement and increasing health data sets. With patients progressively adopting digital tools that uncover patterns within data for improved wellness, and with AI expert systems that are designed to collaborate with providers, health care as we know it is set for a major disruption that could finally make it what it’s supposed to be: patient-centered. This is just one case of the convergence of human behaviors with technological feasibilities that can spark a new experience paradigm.

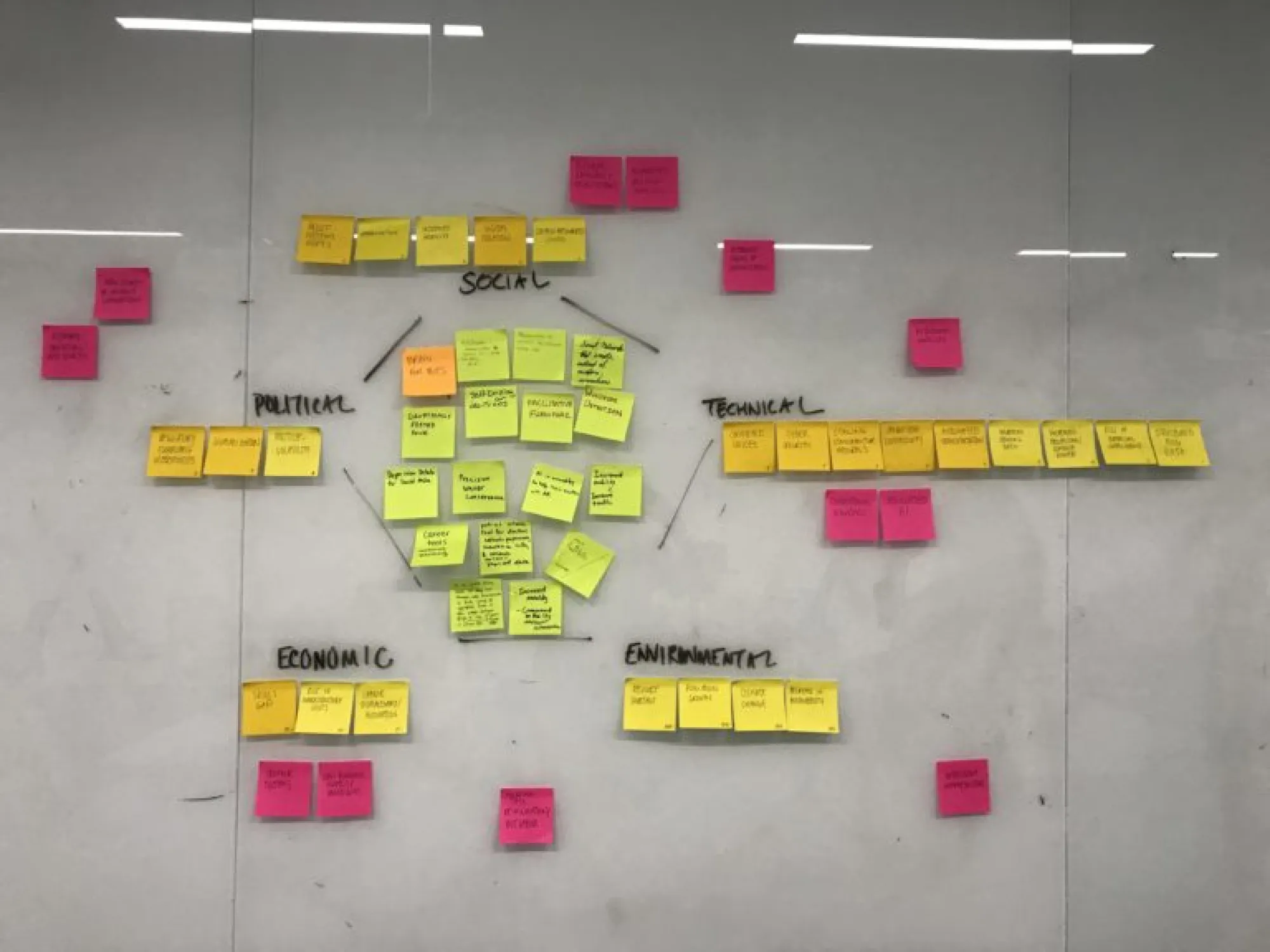

Identifying the Elements of Change

Within this analysis we also looked at the social, technical, economic, environmental and political elements at play to understand what forces are influencing the broader system in which AI is applied and adopted. We collectively found and discussed several drivers that are influencing how individuals live day to day, and what they may prioritize or adopt because of these emergent drivers. We also discussed the instances in which AI could flourish or fail because of certain factors. For example, socially we are finding greater instances of digital isolation, while at the same time finding individuals who thrive from being networked into a digital social fabric. Each of these circumstances requires nuanced manifestations of AI for it to positively influence a new stage of social living. Other technical and economic drivers directly related to applications of AI, such as job automation and labor displacement, automated transportation, and increased computing power and connectivity, are all forces accelerating the role AI can and will play in our daily lives.

Moving to Human Understanding through Social Inquiry

To artfully orchestrate an experience with systems of intelligence, we must root the design and development of artificial intelligence in human sciences. With the foundational knowledge of what forces are taking shape and how drivers are influencing the context in which we live, students were then asked to work in groups and use qualitative research methods to study people and their relationship to potential AI solutions. They were asked to frame their study around a specific industry case and glean insights that could directly guide new and ethical solutions.

Considering the compressed nature of the course, the social inquiry only scratched the surface of understanding how human beliefs and needs could influence instances of AI; however, each group was inspired and informed by the techniques they employed.

Generating Principles

Students discovered primary guiding principles that should be considered in the design and development of intelligent systems:

Humans maintain decision authority. Students found that human actors should have the ultimate decision. The capacity to make decisions as an individual is more valuable than having the computer automate decisions. Further, the computer should empower the individual to make the best decisions and build the best habits. In essence, the computer could become an aid to better personal enlightenment.

Humans as the primary actor. Similar to the desire to retain decision-making responsibility, student groups found that individuals want to initiate the contact or engagement with the system on their terms. Specifically, the system should not be self-acting; rather, the human is the primary actor who initiates the level and form of engagement.

Be relevant but provide moments of serendipity. If the system is to be intelligent, it should know your personal preferences and profile. Beyond providing personally relevant content and experiences, systems should provide discovery and allow exploration by the individual. Students found that closing the system could create adverse effects that hinder guided exploration and limit possibilities.

In my next post, I’ll discuss the design solutions from some of the student groups, as well as elaborate on additional principles that emerged from their qualitative research.